Earlier this school year, Assistant Principal of Pupil and Personnel Services Jessica Graf emailed students and families a copy of the 2024-2025 Schoolwide Grading Policy. The policy categorizes the use of artificial intelligence (AI) alongside plagiarism as an issue of “Academic Integrity” and forbids students from using it “in full or in part” to complete an assignment. In addition, the policy requires students to ensure that teachers “have access to the history of any document that [students] work on” and says that students need to be able to show their work and explain their thought processes if academic dishonesty is suspected.

The inclusion of AI in the policy is an indication of how quickly artificial intelligence has impacted the academic world.

Instructional Support Services (ISS) and English teacher Katherine Gelbman said that she asks students to complete all their work in Google Docs so that their editing history can be reviewed, in line with the policy. She said that if there are doubts, the version history can be checked to find evidence.

“AI has a vagueness to it. It never quite answers the nuanced prompts that we ask,” said Ms. Gelbman. “So, when a response sounds like it’s not the student’s own thoughts, that is a red flag.”

According to English teacher and Classic advisor Brian Sweeney, a number of teachers worked with the school administration to craft the language for the policy. “Though some educators believe AI has a role in the classroom, we think it’s naive to ignore that it’s being misused everywhere. Anyone who shares writing that they did not compose without attributing the source of that writing lacks academic integrity. We need to say that up front and encourage students to be honest about their writing before we can consider other academic uses for AI.”

He said that the English department, which specializes in teaching writing, believes that students should produce their own work for each part of the writing process: researching, outlining, drafting, and editing.

The Classic spoke to multiple students anonymously to learn about how they might or might not be using AI for their work, despite the policy.

According to one sophomore, students who use AI for their writing reword it and then flock to online AI detectors such as QuillBot or GPTZero to ensure that what they are turning in appears human-written. Generally, if the platform reports that the paraphrased AI material seems human-written, the students will turn it in. If the platform still marks the material as potentially AI-generated, students will paraphrase further before running it again.

“It is easy to use multiple paraphrases on top of Chat-GPT-ed content until it cannot be detected,” the sophomore said.

Another anonymous sophomore said, “I used AI heavily on an English assessment. I got caught, and because I admitted it, my teacher was very understanding.” The student shared that now they feel “paranoid” about their work in that class after getting caught.

As students continue to use AI-powered tools to assist with their assignments despite warnings, teachers and administrators at Townsend Harris High School face challenges in enforcing the policy and maintaining academic integrity.

Mr. Sweeney said that AI detectors are too unreliable at the moment to be used as definitive proof of AI use without attribution. “We can use apps that attach to Google Docs that show us important things. For example, we can see when a student just pastes a large chunk of text into a doc and submits it. That evidence combined with what an AI detector says would be enough to ask students to explain since the policy is that students should share their original docs. If they paste something from another app, it doesn’t show us the work they did to write it.”

“If [students who have been accused of using AI] can speak to the ideas that they have written about, and can justify their words, then that is sufficient to show me that they are being truthful and that the work is theirs,” Ms. Gelbman said.

Social studies teacher Francis McCaughey said he uses the Chrome browser extension “Brisk” to review student writing. “You can see all their keystrokes. It is like looking over the shoulder of the student while they type,” he said. The tool is not dissimilar to the “version history” feature of a Google Doc. He also uses multiple AI-checkers on top of the platform because he “knows they are not totally reliable.”

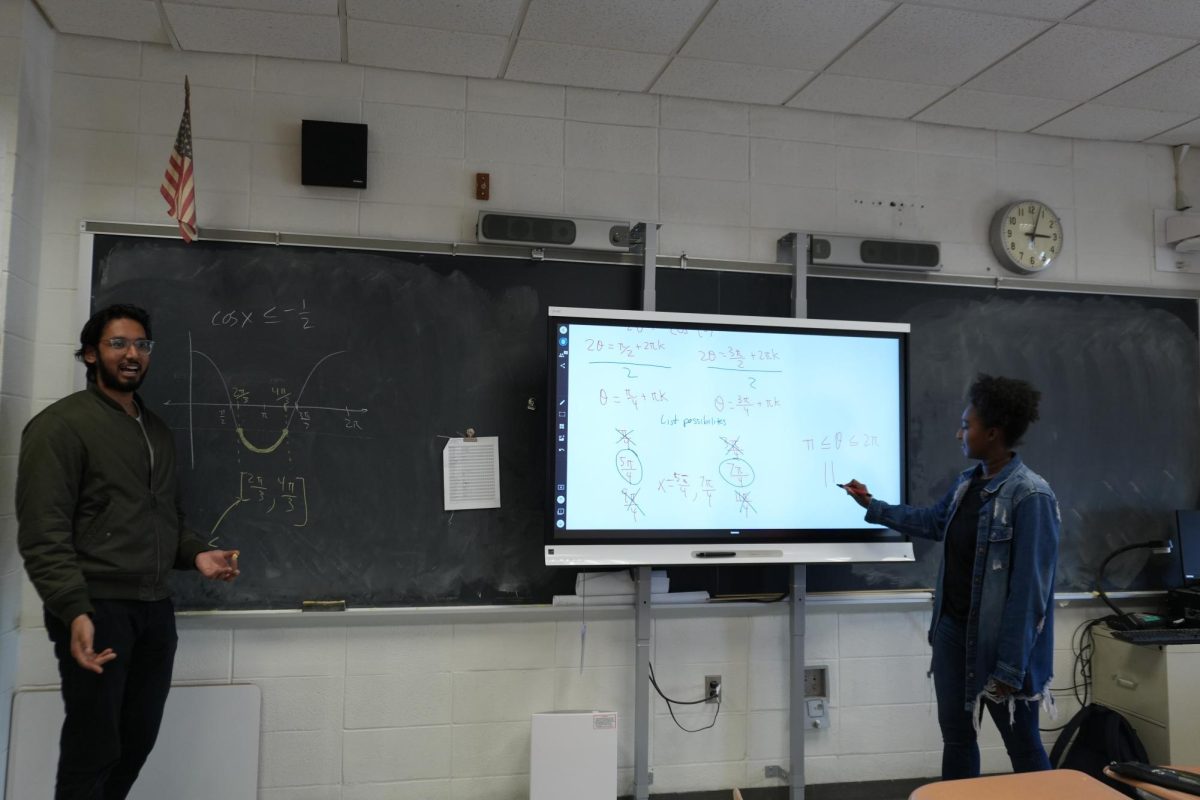

Mr. Sweeney said the simplest method to prevent students from using AI dishonestly is for teachers to assign more handwritten work where students are not turning in documents. While it is not perfect, some teachers are beginning to do this at THHS.

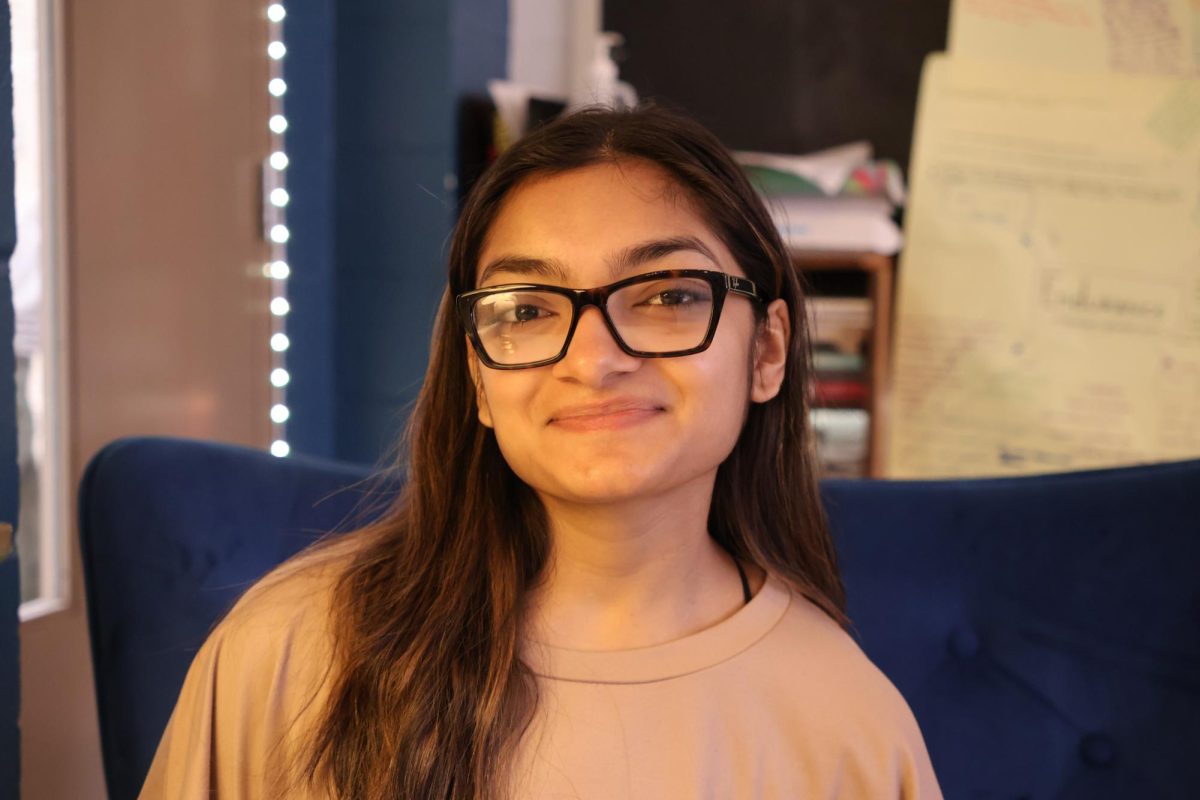

“I haven’t gotten any handwritten assignments in class, but I know some people have,” said junior Thaseena Anjum. “If anything, I had more of them prior to the pandemic.”

Some teachers assign essays to be written and submitted during class, where it’s more difficult for students to use AI without getting caught.

“I realized that there are a lot of assignments that I used to have that I cannot really assign anymore because of the ease with which AI can complete the work,” said social studies teacher Francis McCaughey. “I have done more essays in class now, where they [students] type on a computer in class, and I’m monitoring them. Then I will use an AI detector or application after.”

Ms. Gelbman said she tries her best to “craft assessments that have intricacies and elements that AI cannot replace.” Similarly, Mr. Sweeney said that the English Department and Queens College updated the senior Humanities Seminar essay rubrics to give more weight to categories of writing that AI generators handle less successfully (such as the use of quotations from literary texts).

While many teachers discourage the use of AI, some agree that it is a valuable tool and students should be taught about it. “It is our responsibility, as a school, to evolve and to create new ways to show students how AI should and should not be used,” said Ms. Gelbman.

“We need to figure out ways to incorporate it [AI] in class,” said Mr. McCaughey. “In AP Seminar, students are encouraged to use AI in order to break down complex texts so students can build an understanding. There are explicit policies about what we can do in the [AP] program.”

“I think that the school’s policy provides a reasonable consequence so that when AI is used in a way that constitutes ‘cheating’ the student is warned and given a fair chance to re-submit their work for partial credit,” Ms. Gelbman said. According to the policy, the first time a student is caught using AI they can redo the assignment and receive a 75 at most. Any time they are caught after that, they will get a 0 on the assignment.

One freshman who spoke to The Classic said they find the policy reasonable. “It gives students a chance to redeem themselves when caught,” the freshman said, “which I believe helps them learn [not to use AI dishonestly in the future].”

For others, it’s too reasonable. “[AI] causes students to regress in their understanding of various topics,” said Thaseena. “The policy is too lenient, in my opinion.”

Mr. McCaughey said, “It just seems crazy to me that students would rather use AI to do the work and then use it to refine it to seem human-written, rather than just doing it themselves.”

“I tell my students that we teachers are not assigning writing because we are hoping you will help humanity one day discover the perfect essay,” Mr. Sweeney said. “Writing is a form of exercise. It trains and develops your brain in crucial ways. You can ask AI to create a digital image of yourself that makes you look fit and muscular, but it doesn’t mean that you are those things. Physical exercise and healthy living is what gets you in shape. We all know that. It’s the same for your mind, and if you let AI do that hard work for you, it will hurt you in the long run.”