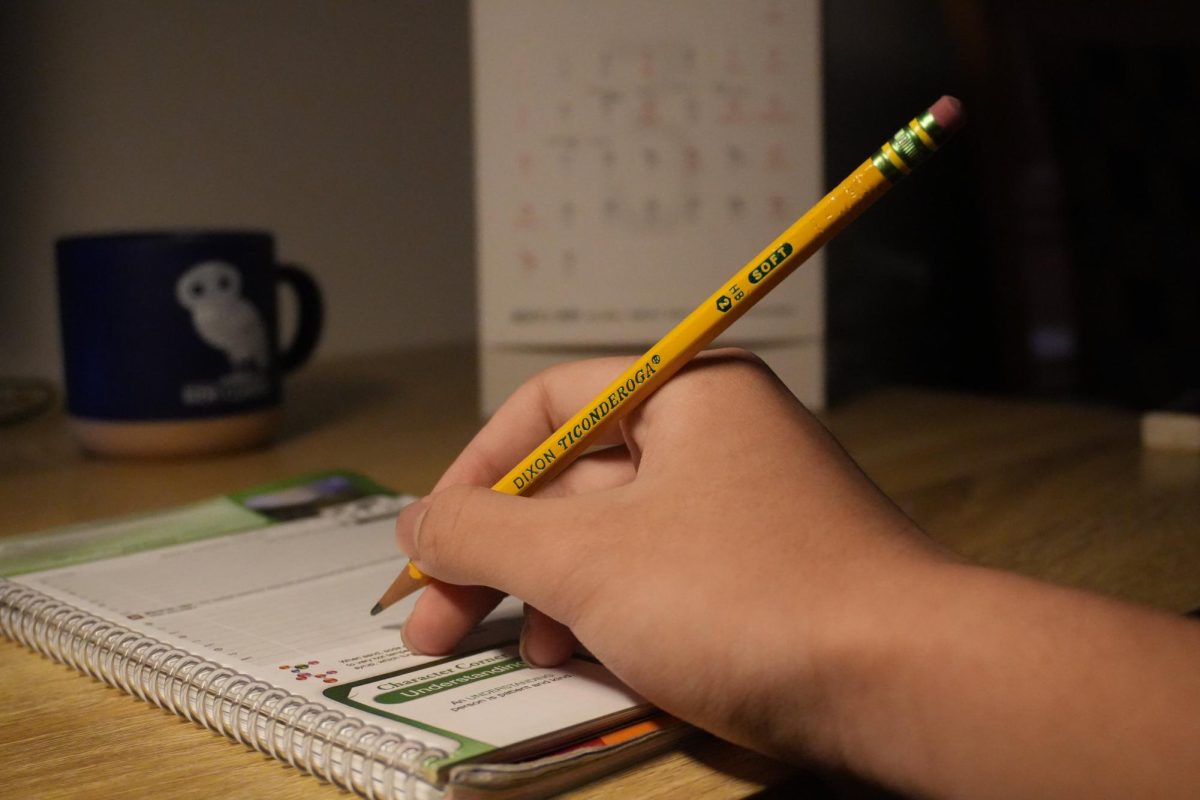

Though some view it as a cure-all, standardized testing has earned a reputation as a threat to the long-term health of American education. Of course, the reasonable response from the city’s Department of Education (DOE) would be to introduce yet another standardized exam, the New York City ELA Performance Assessment, for students to add to their ever-growing list of reasons to loathe learning.

Administered citywide, the ninety-minute assessment was introduced as a tool to evaluate teachers and to diagnose students’ abilities at the start of the year. On a companion exam in the spring, students are expected to score higher than they did in the fall. For Townsend Harris students, who were already capable of doing well on the fall exam, any improvement is bound to be negligible, if it appears at all.

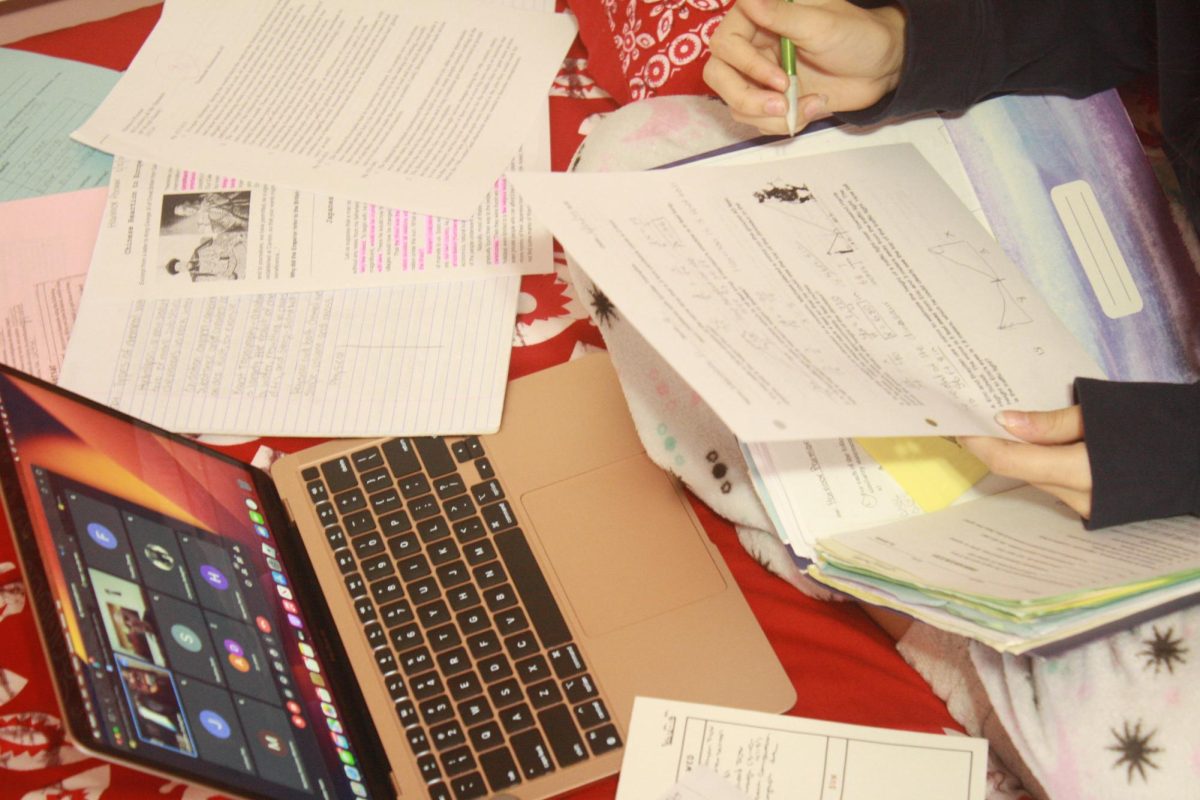

Though the assessment does measure proficiency to some degree, it indirectly creates a high-stakes testing scenario. If students’ scores don’t rise by spring, it looks as though teachers failed to help them progress over the year. As a result, the two sides are pitted against each other: teachers have an incentive to want students to do poorly in the fall so that the spring exam shows an inflated measure of improvement, while students, knowing that teachers must count the exam toward their grades, aim to do well regardless of what that means for their teachers. Isn’t this contrary to the ideals of education? Should teachers want students to perform poorly on this exam so they can show progress later? Should students feel guilty for setting their teachers up for failure by performing too well in the fall and showing too little progress in the spring?

To make matters worse, this ELA assessment is not likely to be the only new “local” assessment; since the new teacher evaluations require scores tied to every teacher, the DOE will have to create many more exams. The assumption that a few exams could offer comprehensive insight into teachers’ effectiveness is false, and having students take them wastes time that could be spent on more meaningful learning. On top of that, English teachers now bear the burden of scoring dozens of these assessments on their own time: quite the token of gratitude for people who already juggle lesson planning and essay grading outside of class.

The ELA assessment further suggests that the DOE doubts the validity of the Regents exams its schools already administer. It seems as if the English Regents, and every other Regents exam for that matter, can’t be trusted to measure students’ skills or teachers’ competence. The English Regents is meant to certify that students have met the requirements of a high school education in English; isn’t that one exam enough? It’s certainly long enough.

Given the gaping holes in the logic behind this test, one would at least expect the Townsend Harris administration to explain the situation fully. Yet in a letter emailed to students on October 3, the administration told us only that the test is meant to measure student progress (which all tests, presumably, aim to do). Offering no further explanation of why the exam exists, the letter goes on to reassure us that we need not worry, since “our students will perform exceptionally well.”

The letter also failed to explain what the test means for THHS faculty. The administration likely could not fully inform us about the test for fear of appearing to coach us for it, but therein lies the problem: everyone wants transparency, yet the system itself is shady.

Assessments like this one can, at best, measure only a few things, chiefly the ability to pass a test by mastering how its questions are phrased rather than by learning the actual content. Though we can’t rid the system of standardized testing entirely, its weight should be minimized as much as possible.

Above all, primary responsibility for assessing students should be entrusted to teachers, and teachers’ competence should be judged on more holistic factors: how receptive students are to their lessons in the classroom, and how well students can convey their understanding of a topic through real-world applications. Some may argue that such qualities are too time-consuming and costly to measure, but given the money and time already spent creating, administering, and grading these tests, that argument no longer holds.